|

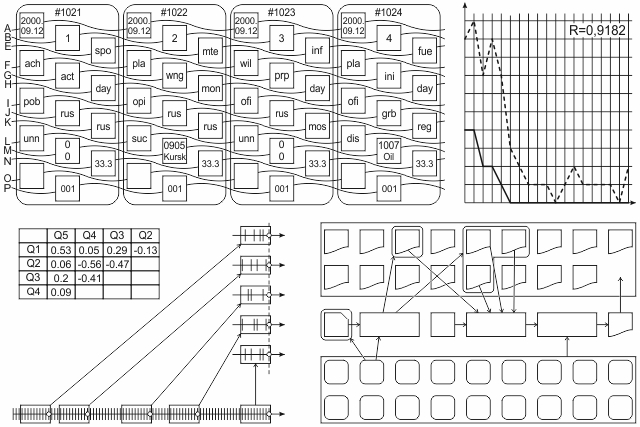

People often resort to intuition. However, intuition that isn't based on experience leads to mistakes. Digital intuition is collective wisdom accumulated over a long period of time. It can warn a person about upcoming events that predominantly occur under similar circumstances.

Importance

Crowd opinion is based on the few news items that many people have become aware of. Governance is a technology based on experience. If public opinion is manipulated in a repeatable way, the algorithm will flag the anomaly and its similarity to earlier cases. Suspicion is not yet an accusation. However, once patterns are discovered, the manipulators' reputation is destroyed. Digital intuition deprives manipulators of their competitive advantage. If the tool is available and keeps improving, it becomes more rational to be honest and original.

The Analytica.Today project is important for conspiracists and anti-conspiracists alike. If an interrelation between conflicts and natural phenomena is discovered, it means someone has such an instrument of blackmail. This is interesting, and people's attention is a liquid commodity nowadays. With a large amount of data, finding interesting connections is only a matter of time.

Message Sequence Analysis

The subject of the invention is a technology for identifying statistical links in a sequence of news items, adverts, or other messages. Incoming messages are classified according to several attributes. Selective reclassification is used to account for different interpretations of how a trait is assessed. The messages, converted into codes, form an estimator matrix. To detect a pattern in a message sequence on a timescale, it is necessary to compare the matrix fragments that come either before or after messages with the same assessment value for one or more traits. The correlation between the superimposed time segments is assessed with the same data filter applied. If the correlation for two or more matrix fragments is high, the data filter is narrowed.
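The fragment comparison described above can be sketched in Python. This is only an illustration under stated assumptions, not the project's actual implementation: each message is assumed to be a row of integer codes, and the column meanings (`TOPIC`, `TONE`), the window length, the sample data, and the helper names `pearson`, `windows_after`, and `mean_fragment_correlation` are all hypothetical.

```python
from math import sqrt

# Hypothetical column layout of the estimator matrix: one row per message.
TOPIC, TONE = 0, 1


def pearson(x, y):
    """Pearson correlation of two equal-length sequences; 0.0 if either is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)


def windows_after(matrix, trait, value, length):
    """Collect the `length` rows that follow each message whose `trait` equals `value`."""
    fragments = []
    for i, row in enumerate(matrix):
        # Skip trigger messages that are too close to the end for a full window.
        if row[trait] == value and i + length < len(matrix):
            fragments.append(matrix[i + 1 : i + 1 + length])
    return fragments


def mean_fragment_correlation(fragments, column):
    """Average pairwise correlation of one column across the superimposed fragments."""
    series = [[row[column] for row in frag] for frag in fragments]
    pairs = [(a, b) for i, a in enumerate(series) for b in series[i + 1 :]]
    if not pairs:
        return 0.0
    return sum(pearson(a, b) for a, b in pairs) / len(pairs)


# Made-up coded messages: [topic code, tone code].
matrix = [
    [1, 0], [2, 3], [3, 5], [4, 4],
    [1, 0], [2, 2], [3, 6], [4, 5],
    [5, 1],
]

# Superimpose the three messages that follow every message with topic code 1.
fragments = windows_after(matrix, trait=TOPIC, value=1, length=3)
score = mean_fragment_correlation(fragments, column=TONE)
```

In the scheme above, a `score` close to 1 across the fragments would be the signal to narrow the data filter and repeat the search.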
The search settings and results are stored in the database as a pattern. A person assesses the discovered examples for significance. A new or repeated pattern search starts with settings that combine two or more known patterns with similar message codes. Patterns with a high significance rating are used more often to create combined search settings. The data filter is additionally extended with random values. Figuratively speaking, the pattern search criteria evolve by crossing, mutation, and selection. The predictive power of the analysis is expressed as the estimated probability that a new or expected message fits a previously identified pattern. Past examples of message sequences show what typically happens under similar circumstances.

Technical Analysis of News

For the system to function, a large archive of historical data has to be processed. First, I am collecting data for the last 20 years, using Excel as my tool. From the material covering the first 20 years of the new millennium, it will be possible to publish a review in the style of Leonid Parfenov's "Namedni". I rate noticeable events from the news feed according to eight criteria comprising 124 signs. This gives me a nine-dimensional matrix for technical analysis. From the data obtained, I plot how the volume of data and its derivative change over time. It is possible to calculate the average correlation between the graphs and to detect local anomalies. For detailed analysis, the selected message is superimposed on a timeline with similar events, and this is done in each category. The correlation between the graphs over the merged time intervals around similar events is then assessed. The detected connections are strengthened by excluding from the graphs the parameters that weaken them. Many dependencies are easy to explain, but unexplained dependencies can also be found.
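The volume graphs, their derivatives, and the local anomalies mentioned above could be computed along the following lines. This is a rough sketch rather than the method actually used in the project: the weekly counts are made-up numbers, and the function names, the trailing-window size, and the two-standard-deviation threshold are assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def differences(series):
    """Discrete derivative: change between consecutive time steps."""
    return [b - a for a, b in zip(series, series[1:])]


def local_anomalies(series, window=5, threshold=2.0):
    """Return indices whose value deviates from the mean of the preceding
    `window` values by more than `threshold` standard deviations."""
    hits = []
    for i in range(window, len(series)):
        past = series[i - window : i]
        sd = pstdev(past)
        # A flat history has no spread to compare against, so it is skipped.
        if sd > 0 and abs(series[i] - mean(past)) > threshold * sd:
            hits.append(i)
    return hits


# Made-up number of rated messages per week in one category.
weekly_counts = [10, 12, 11, 13, 12, 11, 30, 12, 11, 13]

rate_of_change = differences(weekly_counts)
anomalous_weeks = local_anomalies(weekly_counts)
```

With this sample data, only the week with 30 messages stands out against its preceding five weeks. A trailing window is used so that a burst is compared only with what came before it.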
If a news item fits suspiciously well into previously identified sequence patterns, we get predictive power based on mathematics. If the comparison turns out to be interesting, it will attract attention, measured in the number of views. People's attention is the feedback for the artificial intelligence, which will automatically start searching for heuristic combinations. The result is a news forecast. It is similar to a weather forecast, which becomes more accurate as more complex algorithms are used.

How to Predict News

I predict that software designed for searching for patterns in different data arrays will appear within two years. The Analytica.Team domain will host a cloud version. An offline version will also be available. The data entry methods and feature set will adapt to different tasks. Users will be able to create their own projects or participate in existing startups. The Analytica.Team platform will make it possible to hire analysts, and analysts will find ways to earn money on it.

Visitors to the Intuition.Digital website will be able to evaluate the detected patterns and rate them "Crap", "Obvious", or "Eureka" by swiping across the touchscreen. The more ratings there are, the more interesting the results of the artificial intelligence become. Our goal is to find links that humans cannot see.

The Analytica.Today news rating agency will invite news agencies to place a small banner next to the news items they publish. Once a news item gains a certain number of views, the banner will display the pattern rating. The pattern rating is additional content for news agencies, and we will provide it for free. Any reader can push the button to get additional information about patterns from the past.

The business objective is to create information about information. We can sell advertising space or subscriptions. Manually processing a single message costs 2 euros. Automating the processing will reduce this cost several times over.
The global media market is worth trillions. In times of crisis, trust in old experts declines. The significance of digital intuition will grow.

Applications (horizon 2 to 10 years):

* News analysis and the reconstruction of factors affecting a person's opinion.

Project development: October 2018. The idea

The violence-free funding mark indicates that the project has no support from a government, which uses the threat of violence to collect taxes. I'm spending as much time as I can.

|